CAIDAS Trains First Purely German Large Language Model

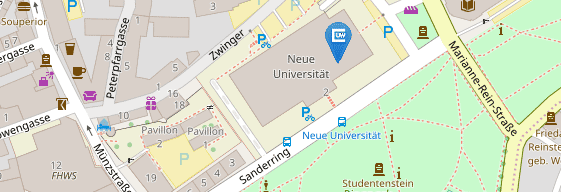

11/15/2024

Milestone for German-language large language models: the first purely German large language model has been trained at the University of Würzburg. It was created at the Centre for Artificial Intelligence and Data Science (CAIDAS).

Julius-Maximilians-Universität Würzburg (JMU) has set a new milestone for German-language large language models (LLMs). Two new models have been successfully trained: LLäMmlein 120M and the more powerful LLäMmlein 1B, which has over a billion parameters. For the first time, models of this kind were trained exclusively on German-language data.

The models will be made available to the public on 15 November 2024.

Training the Language Models in German

Until now, many large language models have been trained mainly on English data sets. This is the gap the Chair of Data Science at CAIDAS at the University of Würzburg, headed by Professor Andreas Hotho, set out to close: "With LLäMmlein, we have created models that have been trained exclusively on German-language data. This not only puts the focus on German language processing and opens up new possibilities for applications specifically tailored to the German language, but also enables the targeted investigation of German language models."

A Transparent German Data Set

To train the models, the researchers cleaned and prepared the existing multilingual RedPajama dataset specifically for German. They broke the texts down into small units called "tokens", which can be individual words or parts of words. The end result was a data set of three trillion German tokens. They also published a specialised program, a so-called "tokenizer", which splits German texts into these units in a way that is particularly well suited to the peculiarities of the German language.
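To illustrate what splitting words into tokens can look like, here is a minimal Python sketch of a greedy longest-match subword tokenizer. The vocabulary `VOCAB` and the function `tokenize` are hypothetical and chosen only for this example; real tokenizers, including the one released with LLäMmlein, learn their vocabularies from training data, and this sketch makes no claim about which algorithm that tokenizer actually uses.

```python
# Toy illustration only: greedy longest-match subword tokenization.
# VOCAB is invented for this example; it is NOT the LLäMmlein vocabulary.
VOCAB = {"Donau", "dampf", "schiff", "fahrt", "Sprach", "modell"}


def tokenize(word: str, vocab: set[str]) -> list[str]:
    """Split `word` left to right into the longest pieces found in `vocab`.

    Characters not covered by any vocabulary entry become
    single-character tokens.
    """
    tokens: list[str] = []
    i = 0
    while i < len(word):
        # Try the longest remaining piece first, then shrink it.
        for j in range(len(word), i, -1):
            piece = word[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            # No vocabulary entry starts here: fall back to one character.
            tokens.append(word[i])
            i += 1
    return tokens


print(tokenize("Donaudampfschifffahrt", VOCAB))  # ['Donau', 'dampf', 'schiff', 'fahrt']
print(tokenize("Sprachmodelle", VOCAB))          # ['Sprach', 'modell', 'e']
```

Long German compounds such as "Donaudampfschifffahrt" are one example of the peculiarities mentioned above: a vocabulary that contains common German word parts can represent them with a few familiar pieces, whereas a vocabulary built mostly from English text tends to break them into more, less meaningful fragments.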

Publishing the data set, along with several model checkpoints from the training phase, enables researchers to better understand the models' learning dynamics and to develop the models further.

To monitor training progress and evaluate the final result, the team used "SuperGLEBer", a benchmark it developed itself for evaluating German LLMs, which comprises 29 tasks.

Diverse Models for Different Applications

"We present two models of different sizes: LLäMmlein 120M and 1B. These provide an insight into how model size influences performance. We also provide special chat variants that are optimised for interactive applications," explains Andreas Hotho.

The different models allow developers and researchers to select the right model for their specific requirements. A preview of a Bavarian variant of the language model already exists.

Outlook and Publication

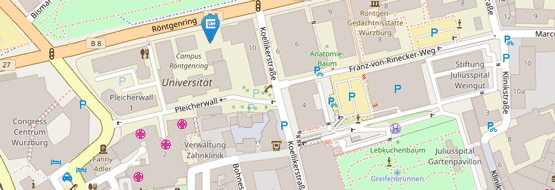

The project is a prelude to the development of even larger models. So far, the computations for the 1B model have been carried out on the NHR@FAU cluster in Erlangen and required 50,000 GPU hours on A100 GPUs with 80 GB of memory; training took around 5 weeks on 64 GPUs. The smaller model was trained on the university's new JuliaV2 cluster and required around 10,000 GPU hours on L40 GPUs.
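The compute figures for the 1B model are consistent with each other, as a quick back-of-the-envelope calculation shows. This sketch assumes that all 64 GPUs ran in parallel for the entire training run with no idle time, which is a simplification; the inputs are the rounded numbers quoted above.

```python
# Back-of-the-envelope check of the quoted compute figures for LLäMmlein 1B.
# Assumption: all GPUs ran in parallel for the whole run, with no idle time.
GPU_HOURS_1B = 50_000  # reported A100 GPU hours
NUM_GPUS = 64          # reported number of GPUs

wall_clock_hours = GPU_HOURS_1B / NUM_GPUS  # hours each GPU was busy
wall_clock_days = wall_clock_hours / 24
wall_clock_weeks = wall_clock_days / 7

print(f"{wall_clock_hours:.0f} h ≈ {wall_clock_days:.1f} days ≈ {wall_clock_weeks:.1f} weeks")
# prints: 781 h ≈ 32.6 days ≈ 4.7 weeks
```

Roughly 4.7 weeks of wall-clock time matches the "around 5 weeks" stated for the training run.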

The Chair of Data Science is part of CAIDAS, the JMU Centre for Artificial Intelligence and Data Science. The centre is supported by the Bavarian High Tech Agenda, which made this research possible in the first place.

Contact

Prof. Dr Andreas Hotho, Chair of Data Science, T +49 931-31-88453, hotho@informatik.uni-wuerzburg.de