Dr. rer. nat. Christian Reul

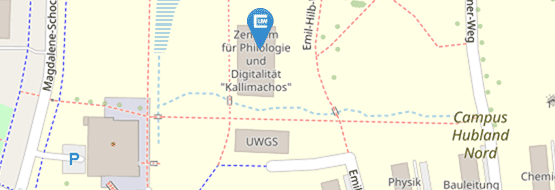

University of Würzburg

Centre for Philology and Digitality

Emil-Hilb-Weg 23

Campus Hubland Nord

D-97074 Würzburg

Room: 00.010

Phone: +49 931 / 31 - 80722

Fax: +49 931 / 31 - 88427

christian.reul@uni-wuerzburg.de

Qualifications and Career

- 09/2023: Offer of an Academy Professorship (W2) for “Research Software Engineering in the Digital Humanities” (Academy of Sciences and Literature Mainz / University of Marburg) – declined

- Since 10/2018 (provisionally until 08/2021): Head of the Digitization Unit of the Centre for Philology and Digitality (University of Würzburg)

- 2015-2020: Doctoral studies at the Chair for Artificial Intelligence and Knowledge Systems (University of Würzburg). Doctoral thesis: An Intelligent Semi-Automatic Workflow for Optical Character Recognition of Historical Printings

- 10/2015-10/2018: Research assistant at the Chair for Artificial Intelligence and Knowledge Systems (University of Würzburg)

- 2009-2015: Studies in Computer Science (Master's degree, specializing in Intelligent Systems) at the University of Würzburg

Major Research Grants

- Reference project "Digital fairy tale reference library by Jacob and Wilhelm Grimm" (DFG, 2024-27)

- Digitisation and indexing of historical textbooks on religious education and development of a knowledge base for educational history research (DFG, 2024-27)

- Robust and performant methods for layout analysis in OCR-D (DFG, 2023-25)

- OCR4all-libraries – Full-text transformation of historical collections (DFG, 2021-24)

Project Participations as Co-Investigator and/or Technical Partner (selection)

- The photo poem in illustrated magazines between 1895 and 1945 (DFG, 2024-27)

- Musical Instruments in Ancient Mesopotamia (MIAM): Terminology, Iconography, and Contexts (DFG, 2024-27)

- William Lovell digital (DFG, 2024-27)

- From English in Hong Kong to Hong Kong English: A new diachronic approach to genre and varietal developments in (post)colonial contexts (DFG, 2024-27)

- Arthurian literature from the library of the Duc de Nemours (DFG, 2023-26)

- The Seven Sages of Rome: editing and reappraising a forgotten premodern classic from global and gendered perspectives (DFG/AHRC, 2023-26)

- Narragonia Latina. Bilingual hybrid edition of the Latin 'Ships of Fools' by Jakob Locher (1497) and Jodocus Badius (1505) (DFG, 2022-25)

- Measuring the World by Degrees. Intensity in early modern medicine and natural philosophy (1400-1650) (DFG, 2022-25)

- Camerarius digital (DFG, 2021-24)

- Annotated Corpus of Ancient West Asian Imagery: Cylinder Seals (ACAWAI-CS) (BMBF, 2021-23)

- Thesaurus Linguarum Hethaeorum digitalis (TLHdig) (DFG, 2020-23)

- Kallimachos – Centre for Digital Edition and Quantitative Analysis (BMBF, 2014-19, member of the Narragonien digital project group)

- Richard Wagner Writings. Historical-critical complete edition (Academy Program, 2013-29)

Honors and Awards

- Best Paper Award at the 6th International Workshop on Historical Document Imaging and Processing (HiP) for the paper Mixed Model OCR Training on Historical Latin Script for Out-of-the-Box Recognition and Finetuning

- Shortlisted for the Best Paper Award at the 3rd International Conference on Digital Access to Textual Cultural Heritage (DATeCH) for the paper Automatic Semantic Text Tagging on Historical Lexica by Combining OCR and Typography Classification

- Award from the Institute of Computer Science at the University of Würzburg for Exceptional Academic Achievements and an Outstanding Master's Thesis

Academic Activities

- Member of IAPR, DAGM, EADH, DHd

- DHd Working Group OCR (Founding Chairman, Convenor 2019-23)

- Joint Organizer of the Working Group "Philology and Digitality" at the University of Würzburg

- Reviewer of research grants for several funding organizations in Germany and Austria

- Reviewer for various journals and conferences in the area of Artificial Intelligence, Pattern Recognition, and Digital Humanities; among others IJDAR, DHQ, JOCCH, JDMDH, JLCL, ICPR, VISAPP, DATeCH, HiP, QURATOR, EADH, CHR, and LREC

- Open Source Handwritten Text Recognition on Medieval Manuscripts using Mixed Models and Document-Specific Finetuning. In 2022 15th IAPR International Workshop on Document Analysis Systems. 2022.

- Mixed Model OCR Training on Historical Latin Script for Out-of-the-Box Recognition and Finetuning. In 6th International Workshop on Historical Document Imaging and Processing. 2021.

- One-Model Ensemble-Learning for Text Recognition of Historical Printings. In Proceedings of the 16th International Conference on Document Analysis and Recognition ICDAR 2021. 2021.

- Calamari - A High-Performance Tensorflow-based Deep Learning Package for Optical Character Recognition. In Digital Humanities Quarterly, 14(2). 2020.

- Automatic Semantic Text Tagging on Historical Lexica by Combining OCR and Typography Classification. In Proceedings of the 3rd International Conference on Digital Access to Textual Cultural Heritage. 2019.

- OCR4all - An Open-Source Tool Providing a (Semi-)Automatic OCR Workflow for Historical Printings. In Applied Sciences, 9(22). 2019.

- Improving OCR Accuracy on Early Printed Books by Utilizing Cross Fold Training and Voting. In 2018 13th IAPR International Workshop on Document Analysis Systems. 2018.

- Improving OCR Accuracy on Early Printed Books by combining Pretraining, Voting, and Active Learning. In JLCL: Special Issue on Automatic Text and Layout Recognition, 33(1), pp. 3–24. 2018.